|

and it's great to see you here! |

|

|

I am a robotics and AI engineer at Maven Robotics working on perception and autonomy systems for mobile embodiments.

Previously, I was a robotic systems master's student at the Robotics Institute, Carnegie Mellon University.

During the program, we worked with Koppers Inc. on devising an industrial cleaning robot as part of the sponsored capstone project, where I primarily led the perception, autonomy, and planning subsystems.

Presently, I am working on active perception in robotic manipulation tasks at the Momentum Robotics Lab under the guidance of Prof. Jeff Ichnowski.

Contact Links: |

|

|

|

|

|

February 2025 - May 2025 Working on Visual-Inertial SLAM for the Pepper robot and integrating it with navigation APIs. I also assist students and researchers in working with robots including Kinova, Fetch, and Misty. |

|

September 2024 - June 2025 Working on adaptive actions with active perception for feedback in closed-loop robotic manipulation tasks. Implemented Koopman operator rollouts for local planning and a VLM for global planning, enabling manipulation task recovery from 8 failure states with an average 73% success rate. |

|

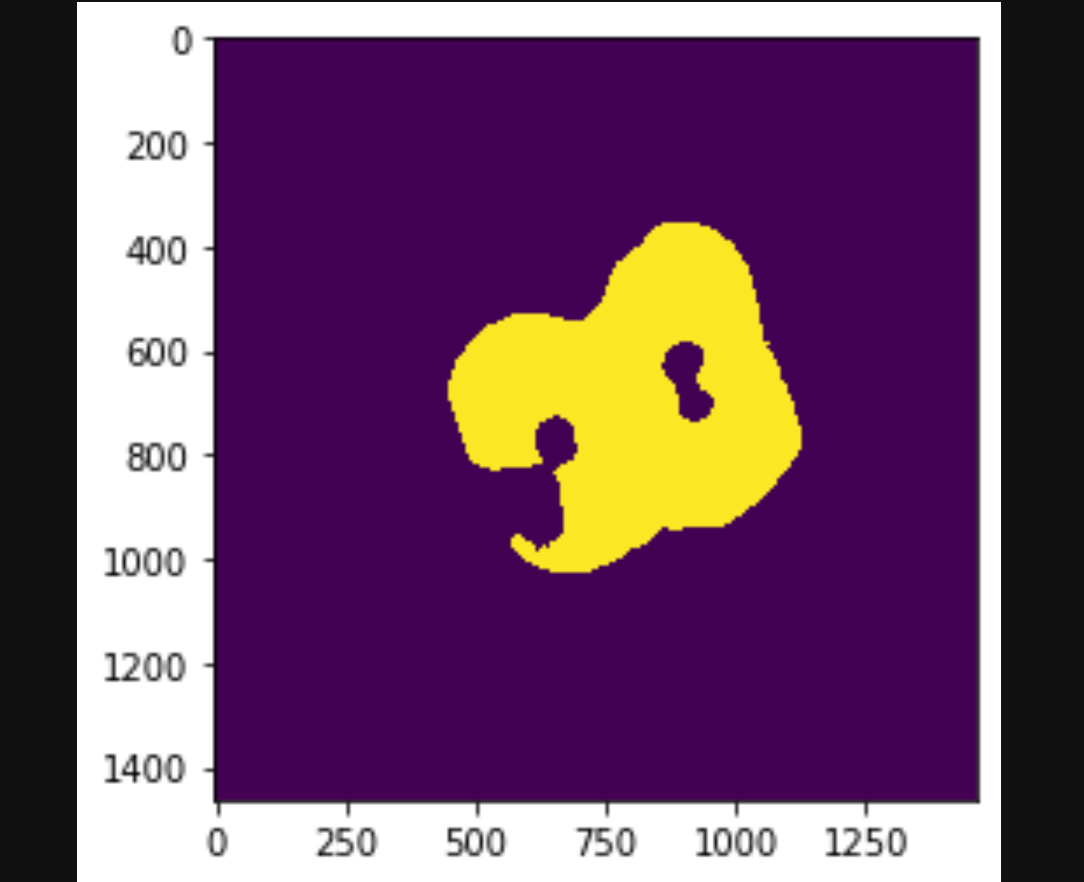

May 2024 - August 2024 Helped improve visual analysis and data processing for 3D CT scans of metal-ion batteries used in non-destructive testing. Aligned 2.5D scans of cylindrical metal-ion cells using morphological operations and developed a parameter auto-tuner using heuristic search to automate the pipeline. Ran multiple development cycles with CI/CD on GitHub Actions to ensure real-time compute optimization with CUDA and production-ready code deployment. |

|

January 2022 - December 2022 As part of the founding team, juggled deployments and experiments to identify valuable deliverables for clients. Architected and implemented data acquisition pipelines, enabling initial deployments and driving $200K+ in funding. Designed systems for efficient compute allocation in client-server deployments, optimizing code for real-time performance. |

|

April 2020 - September 2021 Led the development of a vending cart solution, fitted with a fridge and solar panel, to solve the problem of preserving farm produce for hawking vendors. Engineered the hybrid-controller-based dual-axis solar tracker, improving power acquisition efficiency by 25.1% over the static model with a runtime of more than 8 hours in deployment. Presented the final system to government officials, which led to a product roll-out through startup adoption. |

|

December 2018 - April 2021 Lead of the university’s Unmanned Ground Vehicle Robotics Team, managing personnel and logistics. Led the development of the UGVs Minotaur (2019) and Centaur (2020), working on embedded, controls, and perception systems. As a team, we secured 5th place in the Cyber Security Challenge and 9th overall at the Intelligent Ground Vehicle Competition (IGVC) 2019, held at Oakland University, Michigan. |

|

|

|

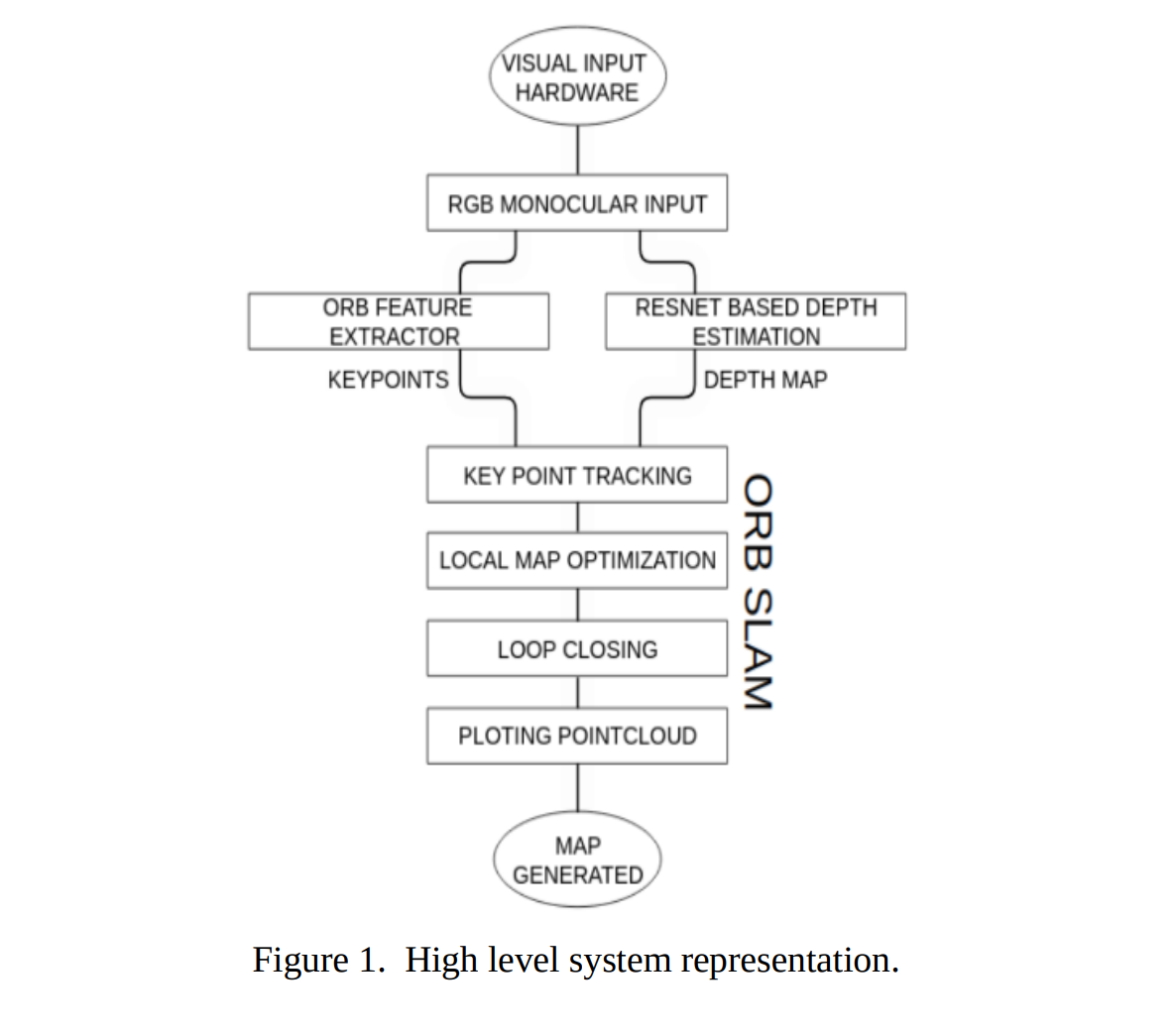

Yatharth Ahuja, Tushar Nitharwal, Utkarsh Sundriyal, S. Indu, A. K. Mandpura Journal of Images and Graphics (10th Edition) 2022 An effort to derive depth information from a monocular input and combine it with keypoints extracted from the same input for processing by ORB-SLAM. |

|

Yatharth Ahuja, Ghanvir Singh, Sushrut Singh, Suraj Bhat, S. K. Saha International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME) 2022 A system that applies renewable energy harvesting techniques to a mobile street vending cart solution. The control strategy is a hybrid controller, a novel combination of open-loop control based on astronomical equations and differential-flatness-based control of the photovoltaic panel's tracking movement. |

|

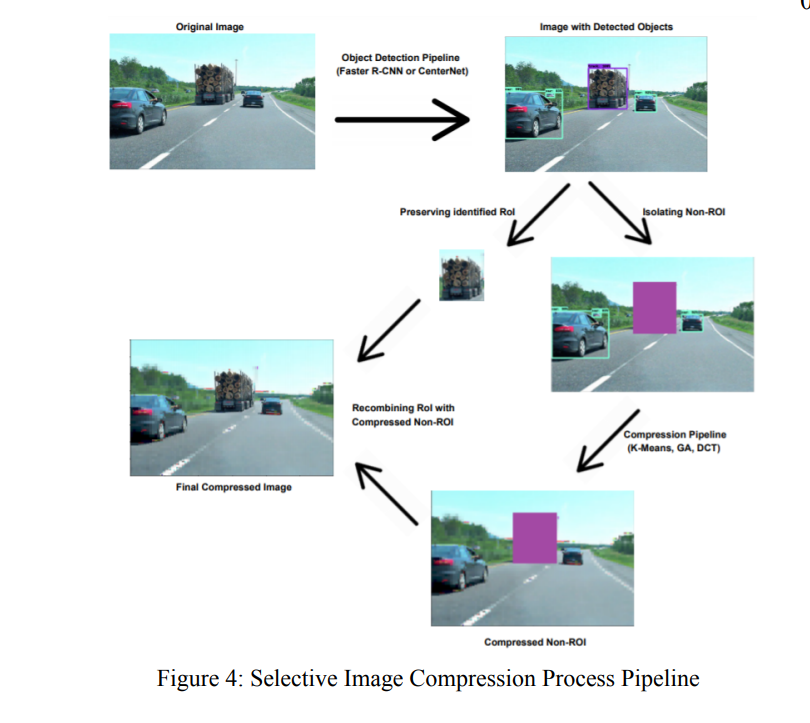

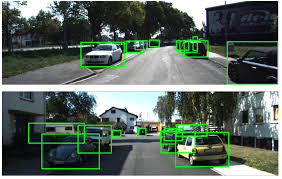

Yatharth Ahuja, Shreyan Sood XXIII Symposium on Image, Signal Processing and Artificial Vision (STSIVA) 2021 Object detection interfaced with selective lossy image compression to improve the efficiency of subsequent image operations and reduce the memory required to store images in self-driving vehicle applications. |

|

|

|

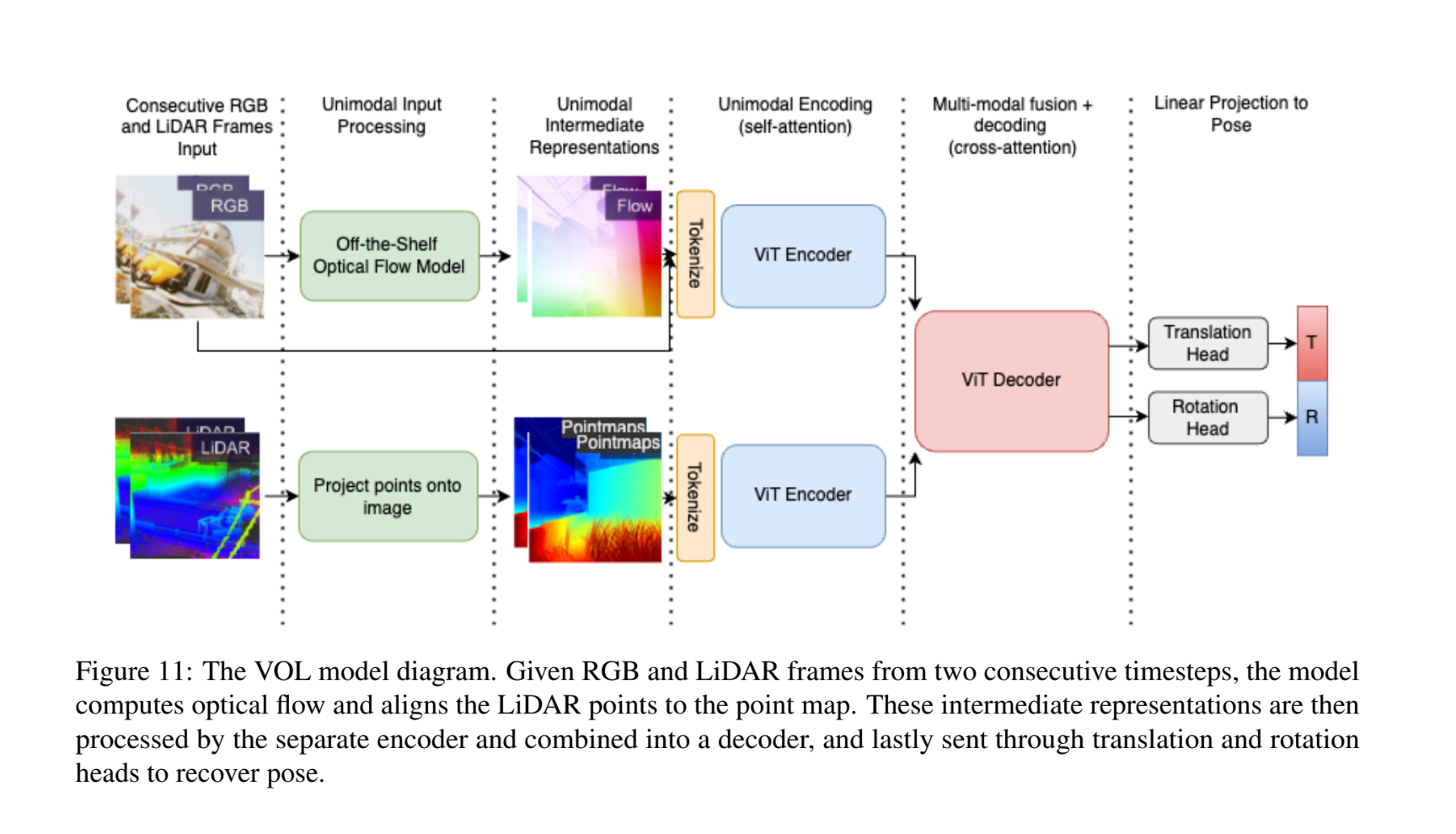

September - November 2024 Developed an odometry model combining vision and LiDAR for better motion tracking. Improved accuracy on KITTI and TartanAir datasets. |

|

January - May 2024 Built a robot to play Fruit Ninja using a 6-DOF arm and YOLOv8 for object tracking. Achieved 94% accuracy and a 0.6 s reaction time. |

|

January - May 2024 Enhanced 3D object detection on the KITTI dataset using a fine-tuned YOLOv8, achieving better accuracy with optimized training. |

|

July 2021 Designed a vision-based method to estimate tooth drill volumes for clinical use at MAIDS, New Delhi. |

|

Find more details about my projects and experiences here. |

|

Modified version of this website. |